Outline

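Overview

Versions 2.1.4 and later use the MGR (MySQL Group Replication) architecture by default, so primary election normally needs no manual handling.

We recommend installing Advisor, which monitors the cluster in the background and performs primary election.

In special cases a manual switchover is needed, which requires logging in to the underlying MySQL instance.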

Details

Test environment: kundb-2.1.8-final

- Manual switchover: run group_replication_set_as_primary on the underlying MySQL

- Graceful switchover through KunDB Advisor (recommended)

Option 1. Run group_replication_set_as_primary on the underlying MySQL

[root@kv2~]# kubectl get pods -owide|grep kundb1

kundb-kungate-kundb1-646f87f668-4llht 1/1 Running 0 42h 172.22.23.3 kv3

kundb-kungate-kundb1-646f87f668-ffqnt 1/1 Running 0 42h 172.22.23.2 kv2

kundb-kungate-kundb1-646f87f668-sqnjw 1/1 Running 0 42h 172.22.23.4 kv4

# Open a shell in any one of the kungate pods

[root@kv2~]# kubectl exec -it kundb-kungate-kundb1-646f87f668-sqnjw -- bash

# Log in to the underlying MySQL

root@kv4:/vt/manager# mysql --socket=/vdir/mnt/disk1/kundb1/kundbdata/mysql.sock -p'TEwD8*9#Qm!Pd&AG'

# Query the performance_schema.replication_group_members table

mysql> select * from performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+

| group_replication_applier | 690e516f-382b-11ee-9559-be9416f75e51 | kv4 | 15006 | ONLINE | PRIMARY | 8.0.30 | XCom |

| group_replication_applier | 6916ff25-382b-11ee-ab46-8a6f5b87ea5a | kv2 | 15006 | ONLINE | SECONDARY | 8.0.30 | XCom |

| group_replication_applier | 69628fc1-382b-11ee-bcd5-8e01529700b9 | kv3 | 15006 | ONLINE | SECONDARY | 8.0.30 | XCom |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+

3 rows in set (0.00 sec)
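When this check is scripted rather than read by eye, the table printed by the mysql client can be parsed to locate the current primary. The following is a minimal sketch, not part of KunDB; the helper names `parse_mysql_table` and `current_primary` are hypothetical, and the sample text is an abbreviated copy of the output above.

```python
# Hypothetical helpers: parse the '|'-delimited table printed by the mysql client.
SAMPLE_OUTPUT = """
+---------------------------+-----------+
| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |
+---------------------------+-----------+
| group_replication_applier | 690e516f-382b-11ee-9559-be9416f75e51 | kv4 | 15006 | ONLINE | PRIMARY | 8.0.30 | XCom |
| group_replication_applier | 6916ff25-382b-11ee-ab46-8a6f5b87ea5a | kv2 | 15006 | ONLINE | SECONDARY | 8.0.30 | XCom |
| group_replication_applier | 69628fc1-382b-11ee-bcd5-8e01529700b9 | kv3 | 15006 | ONLINE | SECONDARY | 8.0.30 | XCom |
+---------------------------+-----------+
3 rows in set (0.00 sec)
"""


def parse_mysql_table(output: str) -> list[dict[str, str]]:
    """Turn mysql client table output into one dict per data row."""
    header = None
    rows = []
    for line in output.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip borders, blank lines and the row-count footer
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if header is None:
            header = cells  # the first '|' line holds the column names
        else:
            rows.append(dict(zip(header, cells)))
    return rows


def current_primary(rows: list[dict[str, str]]) -> dict[str, str]:
    """Return the single member whose MEMBER_ROLE is PRIMARY."""
    primaries = [row for row in rows if row["MEMBER_ROLE"] == "PRIMARY"]
    if len(primaries) != 1:
        raise ValueError(f"expected exactly one PRIMARY, found {len(primaries)}")
    return primaries[0]


members = parse_mysql_table(SAMPLE_OUTPUT)
print(current_primary(members)["MEMBER_HOST"])
```

The MEMBER_ID of whichever SECONDARY should take over is the value passed to group_replication_set_as_primary in the next step.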

# The output above shows that the primary is kv4; here we switch the primary to kv2

# 6916ff25-382b-11ee-ab46-8a6f5b87ea5a is the MEMBER_ID of the node to promote

mysql> select group_replication_set_as_primary('6916ff25-382b-11ee-ab46-8a6f5b87ea5a');

+--------------------------------------------------------------------------+

| group_replication_set_as_primary('6916ff25-382b-11ee-ab46-8a6f5b87ea5a') |

+--------------------------------------------------------------------------+

| Primary server switched to: 6916ff25-382b-11ee-ab46-8a6f5b87ea5a |

+--------------------------------------------------------------------------+

1 row in set (0.28 sec)
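group_replication_set_as_primary is a standard MySQL Group Replication function (available since MySQL 8.0.13) and applies to groups running in single-primary mode. If the statement is generated by a script, it is worth validating the MEMBER_ID before it reaches the mysql client. A minimal sketch, in which the helper name `build_set_primary_sql` is hypothetical:

```python
import uuid


def build_set_primary_sql(member_id: str) -> str:
    """Build the switchover statement, rejecting anything that is not a UUID."""
    # uuid.UUID raises ValueError for malformed input, so a host name or a
    # mistyped value never ends up inside the SQL text.
    canonical = str(uuid.UUID(member_id))
    return f"SELECT group_replication_set_as_primary('{canonical}');"


print(build_set_primary_sql("6916ff25-382b-11ee-ab46-8a6f5b87ea5a"))
```

Passing a host name such as kv2 instead of the MEMBER_ID raises ValueError rather than producing a statement that MySQL would reject.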

# Check again: kv2 is now PRIMARY

mysql> select * from performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+

| group_replication_applier | 690e516f-382b-11ee-9559-be9416f75e51 | kv4 | 15006 | ONLINE | SECONDARY | 8.0.30 | XCom |

| group_replication_applier | 6916ff25-382b-11ee-ab46-8a6f5b87ea5a | kv2 | 15006 | ONLINE | PRIMARY | 8.0.30 | XCom |

| group_replication_applier | 69628fc1-382b-11ee-bcd5-8e01529700b9 | kv3 | 15006 | ONLINE | SECONDARY | 8.0.30 | XCom |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+----------------------------+

3 rows in set (0.00 sec)
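The final check above can also be expressed as a small assertion for scripted runs. This is a sketch under the assumption that the rows of replication_group_members have already been loaded into dictionaries keyed by column name; the function name `verify_switch` is hypothetical.

```python
def verify_switch(members: list[dict[str, str]], target_id: str) -> list[str]:
    """Return a list of problems; an empty list means the switch looks complete."""
    problems = []
    primaries = [m for m in members if m["MEMBER_ROLE"] == "PRIMARY"]
    if len(primaries) != 1:
        problems.append(f"expected exactly one PRIMARY, found {len(primaries)}")
    elif primaries[0]["MEMBER_ID"] != target_id:
        problems.append(f"PRIMARY is {primaries[0]['MEMBER_HOST']}, not the requested member")
    target = [m for m in members if m["MEMBER_ID"] == target_id]
    if not target:
        problems.append("requested member is not in the group")
    elif target[0]["MEMBER_STATE"] != "ONLINE":
        problems.append(f"requested member is {target[0]['MEMBER_STATE']}, expected ONLINE")
    return problems


# Rows taken from the output after the switchover, reduced to the relevant columns.
after_switch = [
    {"MEMBER_ID": "690e516f-382b-11ee-9559-be9416f75e51", "MEMBER_HOST": "kv4",
     "MEMBER_STATE": "ONLINE", "MEMBER_ROLE": "SECONDARY"},
    {"MEMBER_ID": "6916ff25-382b-11ee-ab46-8a6f5b87ea5a", "MEMBER_HOST": "kv2",
     "MEMBER_STATE": "ONLINE", "MEMBER_ROLE": "PRIMARY"},
    {"MEMBER_ID": "69628fc1-382b-11ee-bcd5-8e01529700b9", "MEMBER_HOST": "kv3",
     "MEMBER_STATE": "ONLINE", "MEMBER_ROLE": "SECONDARY"},
]
print(verify_switch(after_switch, "6916ff25-382b-11ee-ab46-8a6f5b87ea5a"))
```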

Option 2. Switch over with Advisor

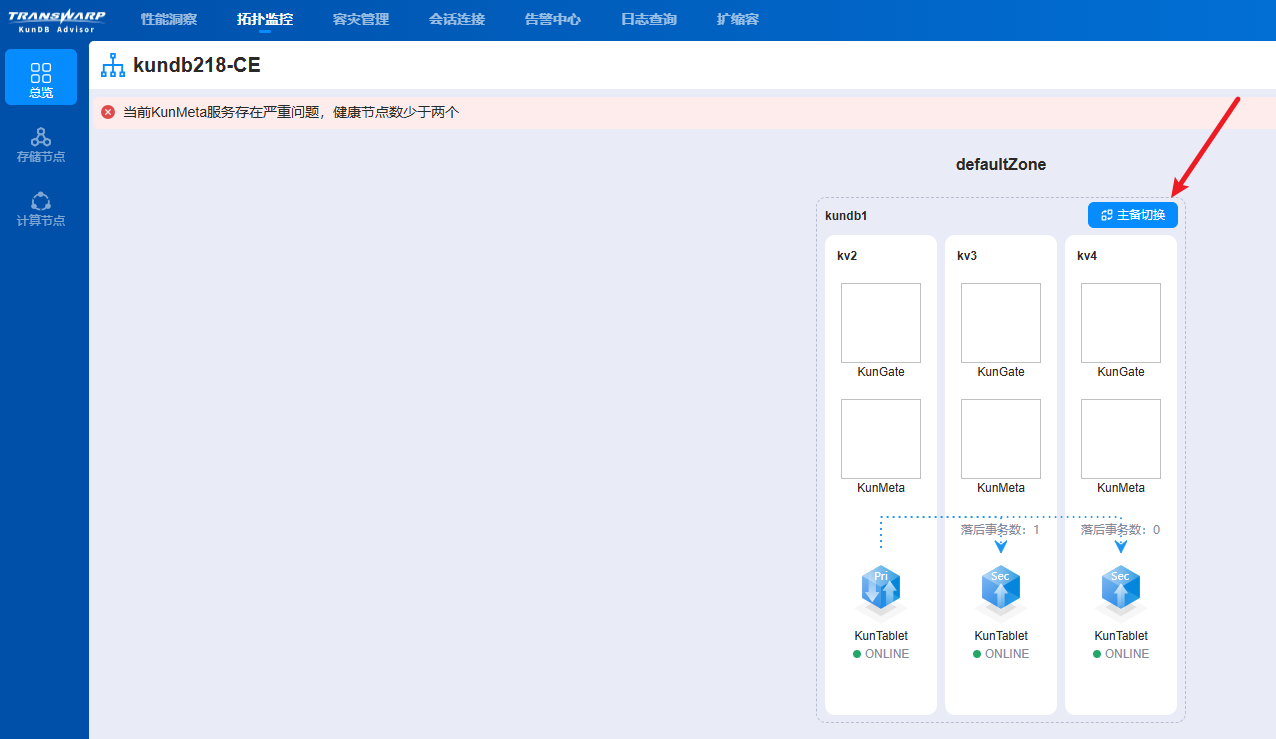

Open the KUNDBA service, go to the corresponding kundb service, and click the primary/standby switchover button at the bottom.

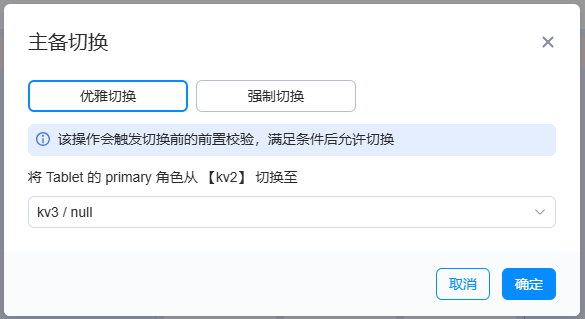

Select graceful switchover, from kv2 to kv3, and click OK.

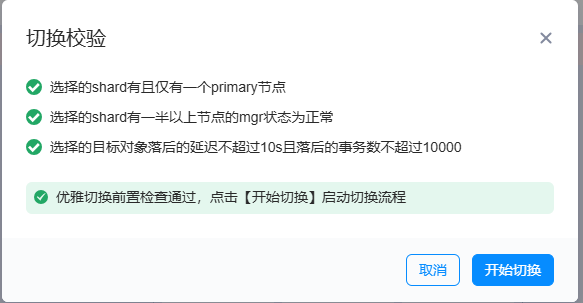

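Before switching, make sure the cluster is healthy and that replication lag is not severe. If these conditions are not met, fix the underlying problems first and then retry the switchover.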
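The exact checks Advisor performs are not described here. As a rough manual approximation, member state can be read from performance_schema.replication_group_members and the apply backlog from the COUNT_TRANSACTIONS_REMOTE_IN_APPLIER_QUEUE column of performance_schema.replication_group_member_stats. The sketch below assumes those rows are already loaded as dictionaries; the function name `precheck` and the threshold of 100 transactions are illustrative assumptions, not KunDB defaults.

```python
def precheck(members, stats, max_applier_queue=100):
    """Return the reasons a switchover should be postponed (empty list = go ahead)."""
    reasons = []
    for member in members:
        if member["MEMBER_STATE"] != "ONLINE":
            reasons.append(
                f"{member['MEMBER_HOST']} is {member['MEMBER_STATE']}, expected ONLINE"
            )
    for stat in stats:
        # Transactions received from the group but not yet applied on this member.
        backlog = int(stat["COUNT_TRANSACTIONS_REMOTE_IN_APPLIER_QUEUE"])
        if backlog > max_applier_queue:
            reasons.append(
                f"member {stat['MEMBER_ID']} has {backlog} transactions waiting to be applied"
            )
    return reasons


# Illustrative input: one member still recovering and far behind.
members = [
    {"MEMBER_HOST": "kv2", "MEMBER_STATE": "ONLINE"},
    {"MEMBER_HOST": "kv3", "MEMBER_STATE": "RECOVERING"},
]
stats = [
    {"MEMBER_ID": "6916ff25-382b-11ee-ab46-8a6f5b87ea5a",
     "COUNT_TRANSACTIONS_REMOTE_IN_APPLIER_QUEUE": "0"},
    {"MEMBER_ID": "69628fc1-382b-11ee-bcd5-8e01529700b9",
     "COUNT_TRANSACTIONS_REMOTE_IN_APPLIER_QUEUE": "500"},
]
for reason in precheck(members, stats):
    print(reason)
```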

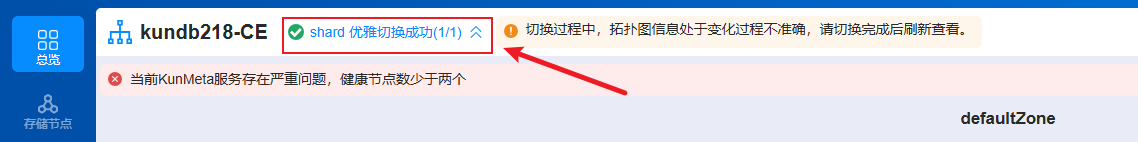

After a short wait, the page reports that the shard graceful switchover succeeded (1/1).

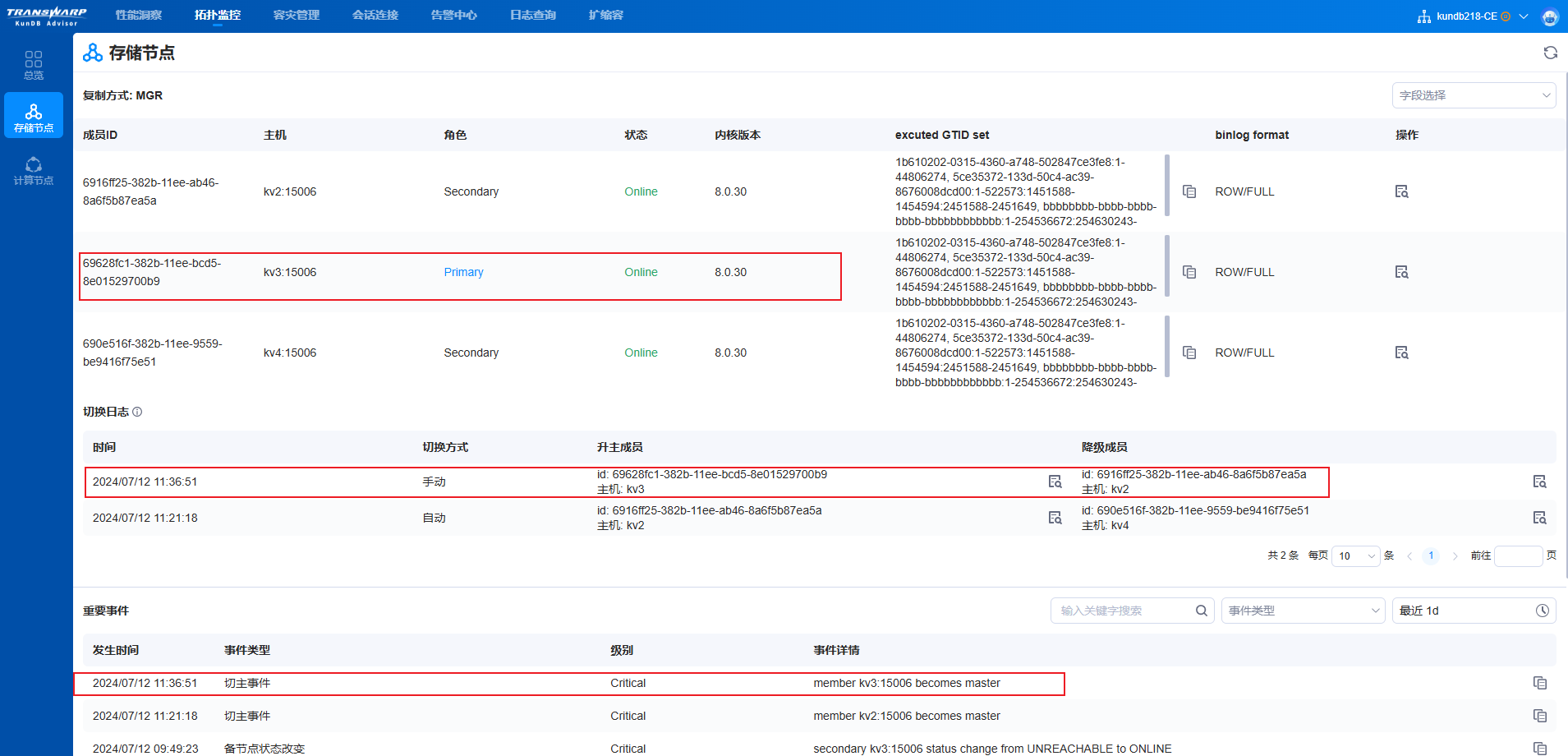

Click the storage nodes to view the switchover record.