概要描述

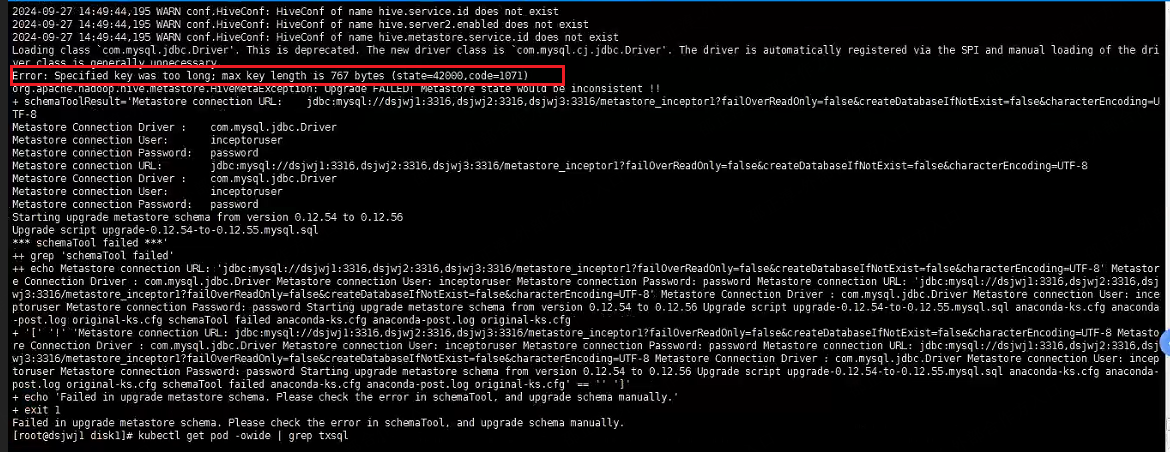

argodb5.2环境,共享inceptor6.2.2 的txsql元数据,更新最新patch之后,quark metastore无法启动,报错 Specified key was too long; max key length is 767 bytes

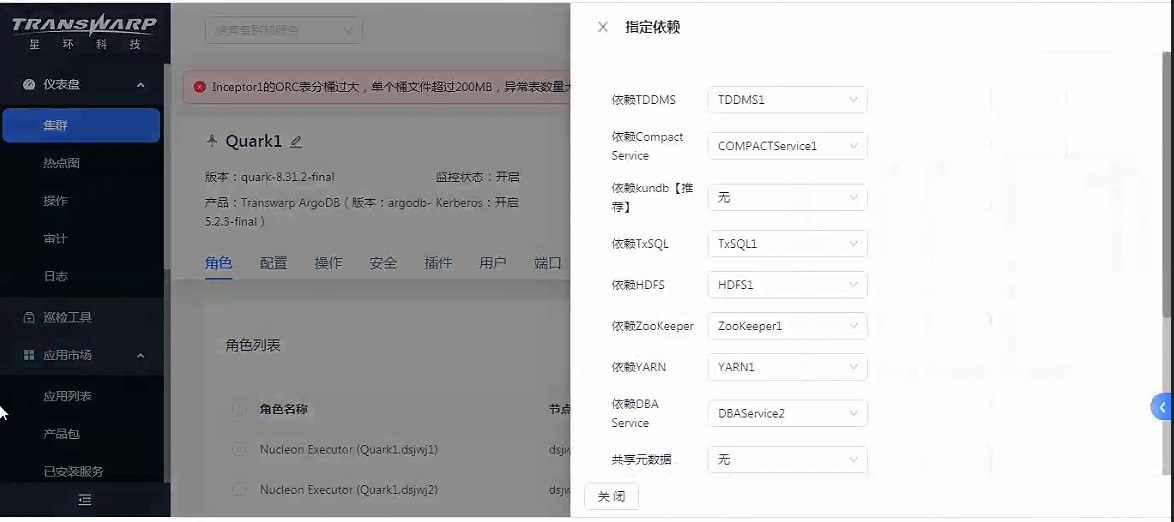

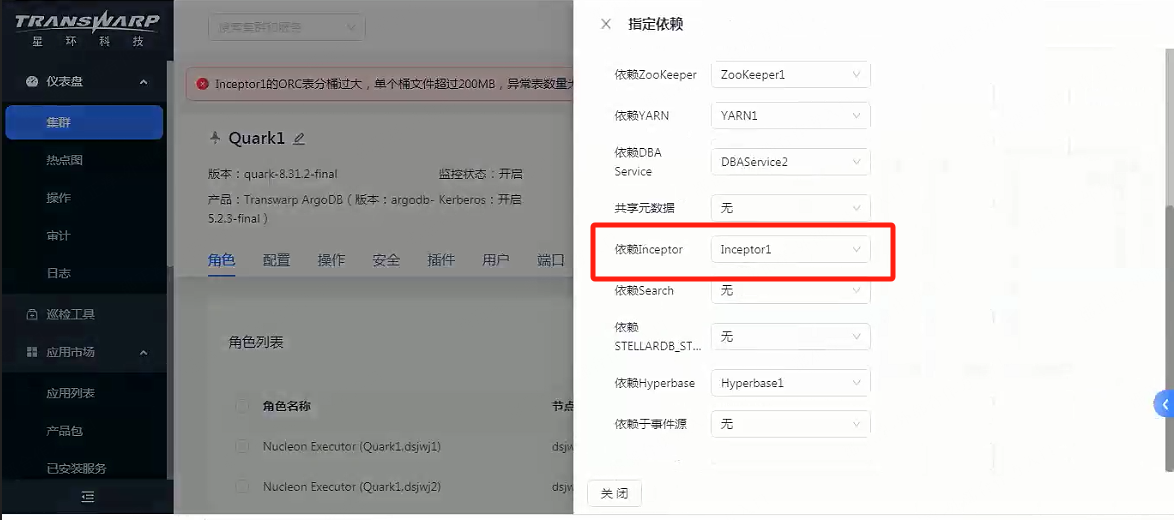

Quark依赖信息:

排查思路

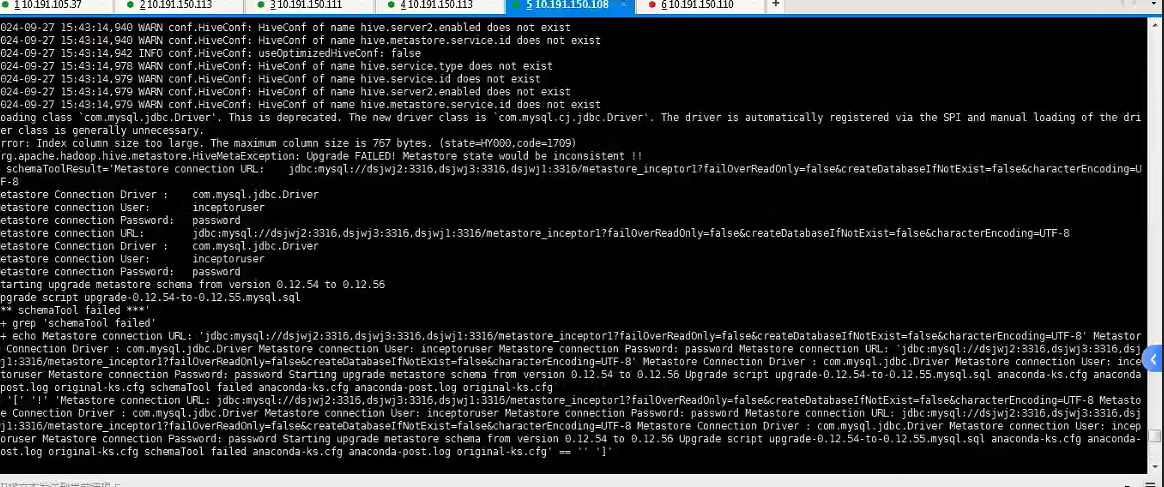

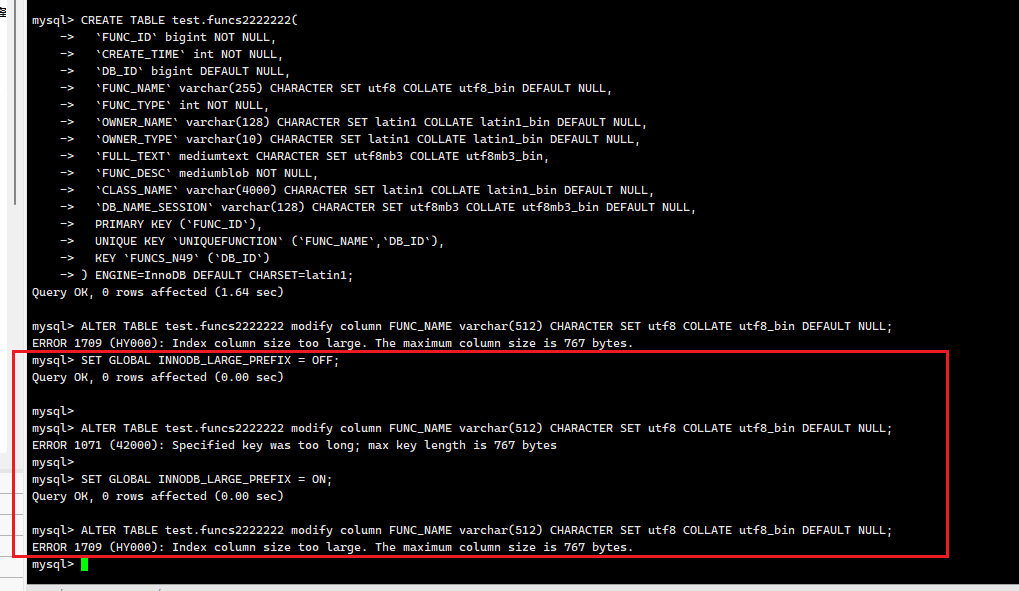

根据该报错 Specified key was too long; max key length is 767 bytes,在txsql中尝试配置 SET GLOBAL INNODB_LARGE_PREFIX = ON;, 再重启metastore,报错变成 Index column size too large. The maximun column size is 767 bytes

从报错日志可以看到,是 在对做 Upgrade script upgrade-0.12.54-to-0.12.55.mysql.sql 元数据升级 的时候抛出的异常,

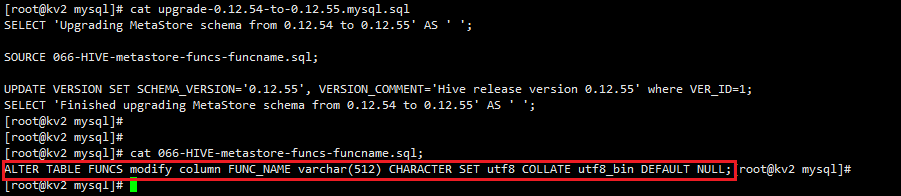

到 inceptor pod内 查看 /usr/lib/hive/scripts/metastore/upgrade/mysql/upgrade-0.12.54-to-0.12.55.mysql.sql,

可以看到,该upgrade升级操作,是如下内容:

ALTER TABLE FUNCS modify column FUNC_NAME varchar(512) CHARACTER SET utf8 COLLATE utf8_bin DEFAULT NULL;

对FUNCS表手动做ALTER操作也能够复现该问题:

解决方案

方案一:txsql迁移到kundb (优先推荐!!!)

方案二:修改字符集为latin1

缺点:不支持中文

方案三:去掉该元数据升级步骤

重做quark镜像,注释掉 /usr/lib/hive/scripts/metastore/upgrade/mysql/upgrade-0.12.54-to-0.12.55.mysql.sql 中的 SOURCE 066-HIVE-metastore-funcs-funcname.sql。

建议客户控制 库名+func/proc名 长度+参数个数,否则会导致FUNC_NAME字段过长。

缺点:库名+func/proc名+参数过多,创建function/procedure时会报错 Attempt to store value "_z54_d7defaultp0proc_this_is_a_proc_with_24_varchar2_paramsvarchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2" in column "FUNC_NAME" that has maximum length of 255. Please correct your data!

FAQ:

select func_name,length(func_name) as ln from metastore_quark1.funcs f order by ln desc limit 10 ;查询结果 length(func_name) 需要控制在255 以内。

create or replace procedure

proc_this_is_a_proc_with_24_varchar2_params (

a35 varchar2(100),

a36 varchar2(100),

a37 varchar2(100),

a38 varchar2(100),

a39 varchar2(100),

a40 varchar2(100),

a41 varchar2(100),

a42 varchar2(100),

a43 varchar2(100),

a44 varchar2(100),

a45 varchar2(100),

a46 varchar2(100),

a47 varchar2(100),

a48 varchar2(100),

a49 varchar2(100),

a50 varchar2(100),

a51 varchar2(100),

a52 varchar2(100),

a53 varchar2(100),

a54 varchar2(100),

a55 varchar2(100),

a56 varchar2(100),

a57 varchar2(100),

a58 varchar2(100),

a59 varchar2(100)

)

is

declare

a int

begin

dbms_output.put_line("proc_this_is_a_proc_with_24_varchar2_params")

end;

报错:SQL 错误 [40000] [08S01]: EXECUTION FAILED: Task CREATE PLSQL FUNCTION error HiveException: [Error 40000] javax.jdo.JDOFatalUserException: Attempt to store value "_z54_d7defaultp0proc_this_is_a_proc_with_24_varchar2_paramsvarchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2" in column "FUNC_NAME" that has maximum length of 255. Please correct your data!

at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:616)

at org.datanucleus.api.jdo.JDOPersistenceManager.jdoMakePersistent(JDOPersistenceManager.java:720)

at org.datanucleus.api.jdo.JDOPersistenceManager.makePersistent(JDOPersistenceManager.java:740)

at org.apache.hadoop.hive.metastore.ObjectStore.createFunction(ObjectStore.java:10897)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:483)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:98)

at com.sun.proxy.$Proxy11.createFunction(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.create_function(HiveMetaStore.java:956)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:483)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:102)

at com.sun.proxy.$Proxy12.create_function(Unknown Source)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$create_function.getResult(ThriftHiveMetastore.java:21987)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$create_function.getResult(ThriftHiveMetastore.java:21971)

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39)

at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39)

at org.apache.hadoop.hive.thrift.HadoopThriftAuthBridge$Server$TUGIAssumingProcessor$1.run(HadoopThriftAuthBridge.java:692)

at org.apache.hadoop.hive.thrift.HadoopThriftAuthBridge$Server$TUGIAssumingProcessor$1.run(HadoopThriftAuthBridge.java:687)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:2197)

at org.apache.hadoop.hive.thrift.HadoopThriftAuthBridge$Server$TUGIAssumingProcessor.process(HadoopThriftAuthBridge.java:687)

at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:285)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

NestedThrowablesStackTrace:

Attempt to store value "_z54_d7defaultp0proc_this_is_a_proc_with_24_varchar2_paramsvarchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2" in column "FUNC_NAME" that has maximum length of 255. Please correct your data!

org.datanucleus.exceptions.NucleusUserException: Attempt to store value "_z54_d7defaultp0proc_this_is_a_proc_with_24_varchar2_paramsvarchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2varchar2" in column "FUNC_NAME" that has maximum length of 255. Please correct your data!

at org.datanucleus.store.rdbms.mapping.datastore.CharRDBMSMapping.setString(CharRDBMSMapping.java:254)

at org.datanucleus.store.rdbms.mapping.java.SingleFieldMapping.setString(SingleFieldMapping.java:180)

at org.datanucleus.store.rdbms.fieldmanager.ParameterSetter.storeStringField(ParameterSetter.java:158)

at org.datanucleus.state.AbstractStateManager.providedStringField(AbstractStateManager.java:1448)

at org.datanucleus.state.StateManagerImpl.providedStringField(StateManagerImpl.java:120)

at org.apache.hadoop.hive.metastore.model.MFunction.dnProvideField(MFunction.java)

at org.apache.hadoop.hive.metastore.model.MFunction.dnProvideFields(MFunction.java)

at org.datanucleus.state.StateManagerImpl.provideFields(StateManagerImpl.java:1170)

at org.datanucleus.store.rdbms.request.InsertRequest.execute(InsertRequest.java:292)

at org.datanucleus.store.rdbms.RDBMSPersistenceHandler.insertObjectInTable(RDBMSPersistenceHandler.java:162)

at org.datanucleus.store.rdbms.RDBMSPersistenceHandler.insertObject(RDBMSPersistenceHandler.java:138)

at org.datanucleus.state.StateManagerImpl.internalMakePersistent(StateManagerImpl.java:3363)

at org.datanucleus.state.StateManagerImpl.makePersistent(StateManagerImpl.java:3339)

at org.datanucleus.ExecutionContextImpl.persistObjectInternal(ExecutionContextImpl.java:2079)

at org.datanucleus.ExecutionContextImpl.persistObjectWork(ExecutionContextImpl.java:1922)

at org.datanucleus.ExecutionContextImpl.persistObject(ExecutionContextImpl.java:1777)

at org.datanucleus.ExecutionContextThreadedImpl.persistObject(ExecutionContextThreadedImpl.java:217)

at org.datanucleus.api.jdo.JDOPersistenceManager.jdoMakePersistent(JDOPersistenceManager.java:715)

at org.datanucleus.api.jdo.JDOPersistenceManager.makePersistent(JDOPersistenceManager.java:740)

at org.apache.hadoop.hive.metastore.ObjectStore.createFunction(ObjectStore.java:10897)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:483)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:98)

at com.sun.proxy.$Proxy11.createFunction(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.create_function(HiveMetaStore.java:956)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:483)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:102)

at com.sun.proxy.$Proxy12.create_function(Unknown Source)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$create_function.getResult(ThriftHiveMetastore.java:21987)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$create_function.getResult(ThriftHiveMetastore.java:21971)

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39)

at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39)

at org.apache.hadoop.hive.thrift.HadoopThriftAuthBridge$Server$TUGIAssumingProcessor$1.run(HadoopThriftAuthBridge.java:692)

at org.apache.hadoop.hive.thrift.HadoopThriftAuthBridge$Server$TUGIAssumingProcessor$1.run(HadoopThriftAuthBridge.java:687)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:2197)

at org.apache.hadoop.hive.thrift.HadoopThriftAuthBridge$Server$TUGIAssumingProcessor.process(HadoopThriftAuthBridge.java:687)

at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:285)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)