Overview

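This article describes how to deploy a Spark client on a host outside a TDH cluster and connect it to a TDH cluster that has security authentication (Kerberos) enabled.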

Environment preparation

| HOSTNAME | IP | Notes |
|---|---|---|
| tdh1-600 | 172.22.16.88 | TDH 6.0.2 node 1, Discover notebook node |
| tdh2-600 | 172.22.16.88 | TDH 6.0.2 node 2 |
| tdh3-600 | 172.22.16.88 | TDH 6.0.2 node 3 |
| xiannv | 172.22.16.188 | Standalone host outside the cluster |

Make sure the network between the external host and the cluster nodes is open.
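One way to confirm that the network is open is to attempt TCP connections from the external host to the cluster's service ports. The minimal sketch below relies on bash's /dev/tcp redirection and coreutils `timeout`; `check_port` is a hypothetical helper name, and the port numbers in the usage note are common upstream defaults, not values confirmed for TDH.

```shell
# check_port HOST PORT: print OK or UNREACHABLE for one TCP connection attempt.
# Hypothetical helper; needs bash (for /dev/tcp) and coreutils `timeout`.
check_port() {
  local host=$1 port=$2
  # bash opens a TCP socket when redirecting to /dev/tcp/HOST/PORT;
  # timeout gives up after 3 seconds (e.g. when a firewall silently drops packets)
  if timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null; then
    echo "OK $host:$port"
  else
    echo "UNREACHABLE $host:$port"
    return 1
  fi
}
```

For example, `for p in 88 8020 8032; do check_port 172.22.16.88 "$p"; done` probes the usual Kerberos KDC (88), HDFS NameNode RPC (8020) and YARN ResourceManager (8032) ports; take the real ports from krb5.conf and the cluster's *-site.xml files.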

Details

1 Deploy the TDH Client outside the cluster

Download or copy the TDH-Client package to the external host 172.22.16.188.

Run source init.sh to install krb5 and related commands and to write the TDH cluster's hostnames and their IP addresses into /etc/hosts.

For details, see section "8.6. Install the TDH Client" in the TDH Installation Manual:

https://www.warpcloud.cn/#/documents-support/docs-detail/document/TDH-OPS/6.2/010InstallManual?docType=docs%3Fcategory%3DTDH%26index%3D0&docName=TDH%E5%AE%89%E8%A3%85%E6%89%8B%E5%86%8C

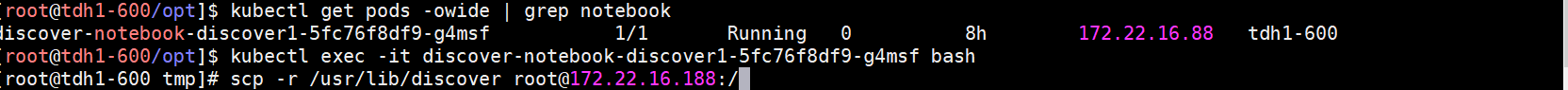

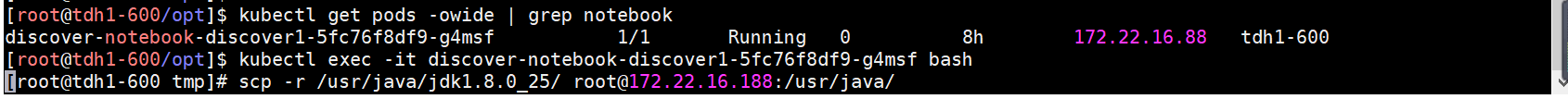
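After running source init.sh, you can check that /etc/hosts really contains the cluster hostnames. A minimal sketch with a hypothetical helper `missing_hosts`, which prints every given hostname that does not appear as a whole word on a non-comment line of the hosts file:

```shell
# missing_hosts HOSTS_FILE NAME...: print each NAME not found in HOSTS_FILE.
# Hypothetical helper; commented-out lines are ignored.
missing_hosts() {
  local hosts_file=$1 h
  shift
  for h in "$@"; do
    # strip comment lines, then look for the hostname as a whole word
    if ! grep -v '^[[:space:]]*#' "$hosts_file" | grep -qw -- "$h"; then
      echo "$h"
    fi
  done
}
```

Running `missing_hosts /etc/hosts tdh1-600 tdh2-600 tdh3-600` should print nothing once init.sh has added all three nodes.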

2 Copy the Spark directories from the Discover notebook pod

On the TDH cluster, enter the Discover notebook pod, then:

1) Copy the entire /usr/lib/discover directory to the external node 172.22.16.188.

2) Spark and Scala are sensitive to the Java version, so it is recommended to also copy the Java environment from the pod directly to the external node.

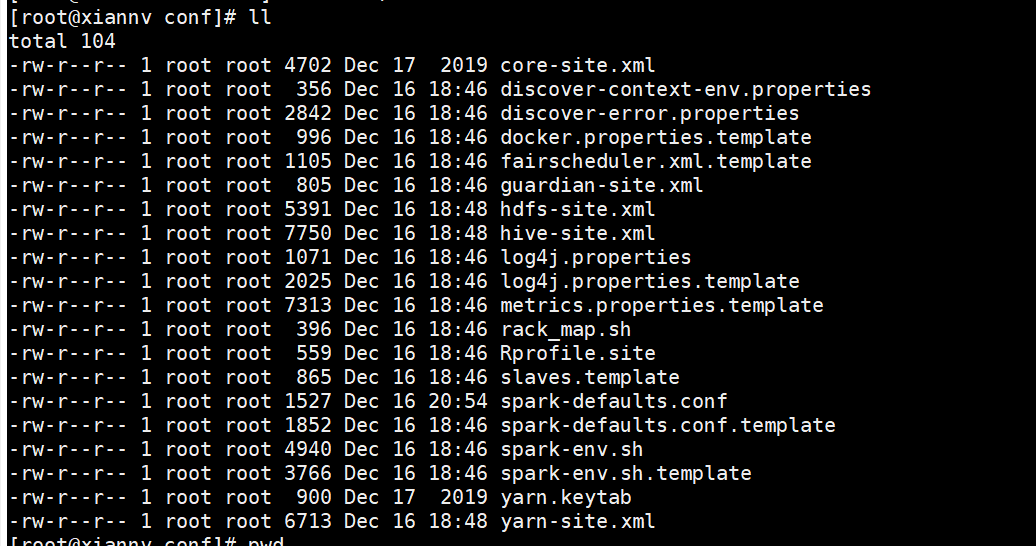
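If kubectl is available on a cluster node, the copy in step 2 could look like the sketch below. This is an assumption, not the documented TDH procedure: the namespace and pod name are placeholders (find the pod with `kubectl get pods --all-namespaces | grep notebook`), `kubectl cp` requires `tar` inside the container, and the targets /discover and /usr/java are chosen only to match the /discover/... and /usr/java/... paths used later in this article. By default the hypothetical helper only prints the commands.

```shell
# run CMD...: print a command; execute it only when DRY_RUN=0 (default: print only).
run() {
  echo "$*"
  if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; fi
}

# copy_from_pod NAMESPACE POD JDK_DIR DEST_HOST (hypothetical helper)
copy_from_pod() {
  local ns=$1 pod=$2 jdk_dir=$3 dest=$4 jdk_name
  jdk_name=$(basename "$jdk_dir")
  # pull the Spark client and the pod's JDK onto the current cluster node
  run kubectl cp "$ns/$pod:/usr/lib/discover" ./discover
  run kubectl cp "$ns/$pod:$jdk_dir" "./$jdk_name"
  # push both to the external host: /discover and /usr/java/<jdk>
  run scp -r ./discover "root@$dest:/"
  run ssh "root@$dest" mkdir -p /usr/java
  run scp -r "./$jdk_name" "root@$dest:/usr/java/"
}
```

After checking the printed commands, export DRY_RUN=0 and call it again, e.g. `copy_from_pod "$NS" "$POD" "$JDK_DIR" 172.22.16.188` with the three variables set to the real values.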

3 Copy the cluster configuration files to the external server

Place the cluster's hive-site.xml, yarn-site.xml, hdfs-site.xml and core-site.xml, together with the YARN keytab file, in the discover/conf directory on the external node 172.22.16.188.
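A small check that all five files from step 3 ended up in the conf directory. `check_conf_dir` is a hypothetical helper, and the keytab name yarn.keytab is an assumption taken from the --keytab path used in step 7.

```shell
# check_conf_dir DIR: report which required client files are missing from DIR.
# Returns 1 if anything is missing. Hypothetical helper.
check_conf_dir() {
  local dir=$1 f missing=0
  for f in hive-site.xml yarn-site.xml hdfs-site.xml core-site.xml yarn.keytab; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $f"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `check_conf_dir /discover/conf` prints one line per missing file and succeeds silently when everything is in place.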

4 Configure the Java environment on the external node

vim /etc/profile

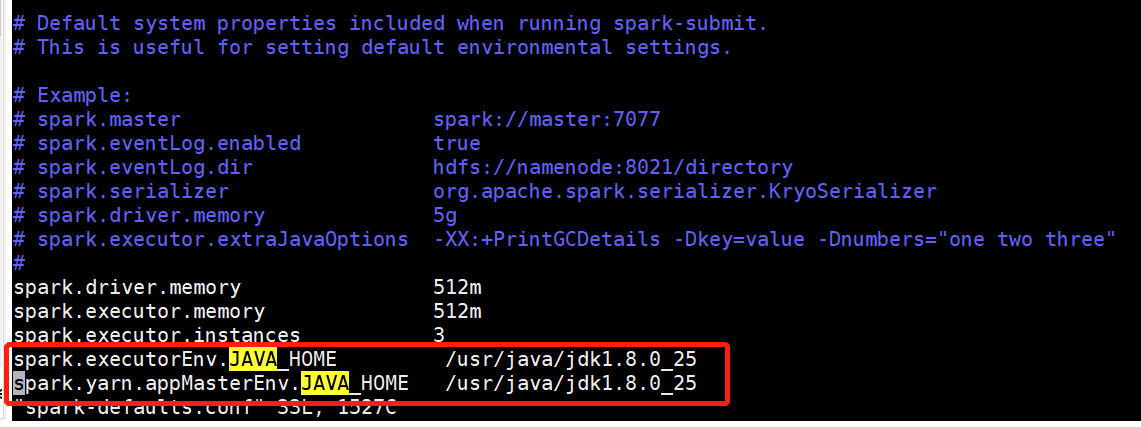
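Editing /etc/profile can also be scripted. A minimal sketch, assuming the JDK copied in step 2 lives under /usr/java (e.g. /usr/java/jdk1.8.0_255); `add_java_home` is a hypothetical helper that appends a JAVA_HOME export plus a PATH entry, and leaves the file alone if it already exports JAVA_HOME.

```shell
# add_java_home PROFILE JDK_DIR: append JAVA_HOME/PATH exports once. Hypothetical helper.
add_java_home() {
  local profile=$1 jdk=$2
  if grep -q '^export JAVA_HOME=' "$profile" 2>/dev/null; then
    echo "JAVA_HOME already set in $profile, not changing it"
    return 0
  fi
  # single quotes keep $JAVA_HOME and $PATH literal so they expand at login time
  printf 'export JAVA_HOME=%s\nexport PATH=$JAVA_HOME/bin:$PATH\n' "$jdk" >> "$profile"
}
```

Usage: `add_java_home /etc/profile /usr/java/jdk1.8.0_255 && . /etc/profile && java -version` applies the change to the current shell and shows which Java is now picked up.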

export JAVA_HOME=/usr/java/jdk1.8.0_255

5 Check spark-defaults.conf on the external node

The main point is to check that the Java environment is configured correctly; the path here is the JDK path inside the YARN pod.

If JAVA_HOME is not set here, it can instead be passed on the spark-submit command line:

--conf spark.yarn.appMasterEnv.JAVA_HOME=/usr/java/jdk1.8.0_25 \

--conf spark.executorEnv.JAVA_HOME=/usr/java/jdk1.8.0_25 \
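To avoid passing these options on every spark-submit call, the two properties can be written into spark-defaults.conf. A minimal sketch, assuming the file sits at /discover/conf/spark-defaults.conf and uses the whitespace-separated `key value` layout of Spark's template (lines written as `key=value` are not matched); `set_spark_conf` is a hypothetical helper.

```shell
# set_spark_conf FILE KEY VALUE: set KEY to VALUE, replacing any existing KEY line.
# Hypothetical helper for whitespace-separated "key value" files.
set_spark_conf() {
  local file=$1 key=$2 value=$3 tmp
  tmp=$(mktemp) || return 1
  if [ -f "$file" ]; then
    # keep every line whose first field is not KEY (comments and blank lines survive)
    awk -v k="$key" '$1 != k' "$file" > "$tmp"
  fi
  printf '%s %s\n' "$key" "$value" >> "$tmp"
  # overwrite in place so the original file's permissions are kept
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

Usage: run it twice, once with `spark.yarn.appMasterEnv.JAVA_HOME` and once with `spark.executorEnv.JAVA_HOME`, both with /usr/java/jdk1.8.0_25.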

6 Upload or download the test jar

cd /discover/jars

wget http://central.maven.org/maven2/io/snappydata/snappy-spark-examples_2.11/2.1.1.1/snappy-spark-examples_2.11-2.1.1.1.jar

7 Run the Spark command and submit a job to YARN to verify the setup

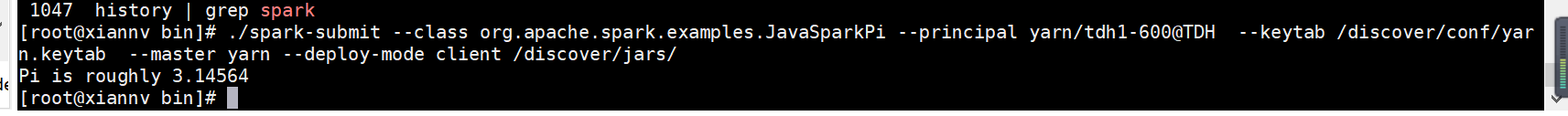
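Before submitting, it can be worth confirming that the keytab actually contains the principal passed to --principal. A minimal sketch, assuming MIT-style `klist -kt` output (installed with krb5 by init.sh), where the principal is the last column of each entry; `keytab_has_principal` is a hypothetical helper that reads that output on stdin.

```shell
# keytab_has_principal PRINCIPAL: succeed if the klist -kt output on stdin lists PRINCIPAL.
# Hypothetical helper; assumes no -e flag, so the principal is the last field.
keytab_has_principal() {
  awk -v p="$1" '$NF == p { found = 1 } END { exit !found }'
}
```

Usage: `klist -kt /discover/conf/yarn.keytab | keytab_has_principal yarn/tdh1-600@TDH && kinit -kt /discover/conf/yarn.keytab yarn/tdh1-600@TDH` only obtains a ticket when the principal is present.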

cd /discover/bin

./spark-submit --class org.apache.spark.examples.JavaSparkPi --principal yarn/tdh1-600@TDH --keytab /discover/conf/yarn.keytab --master yarn --deploy-mode client /discover/jars/

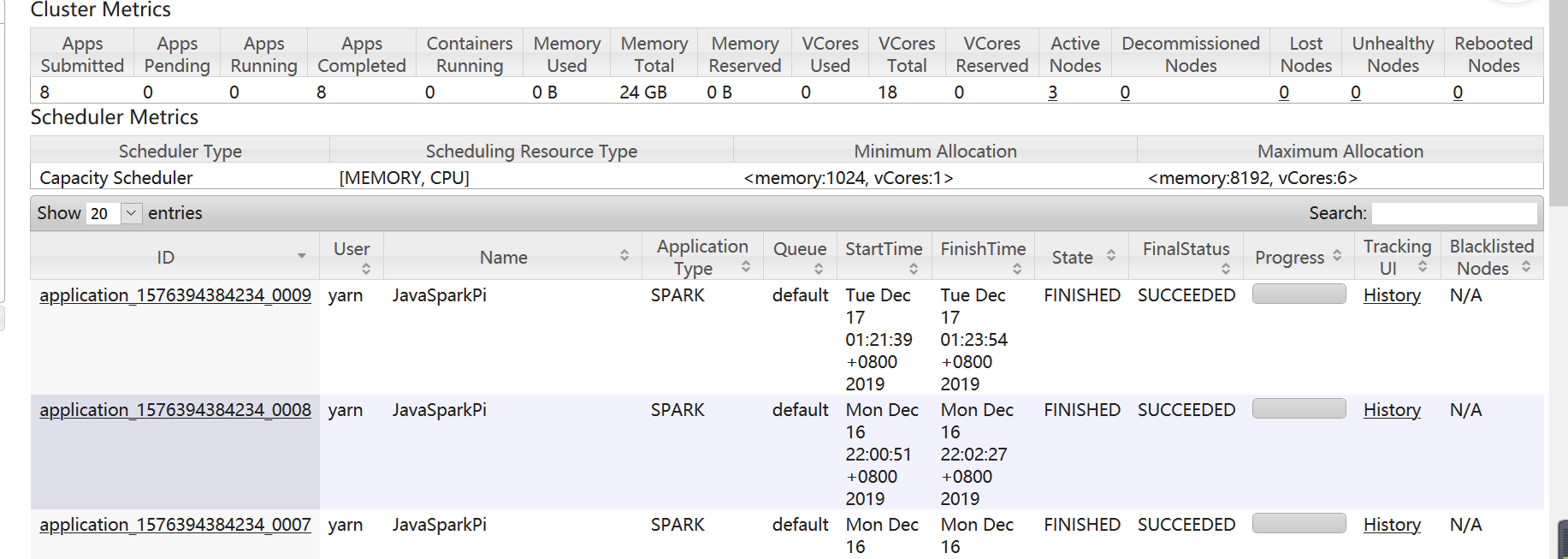
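The spark-submit command above can also be wrapped so that the two JAVA_HOME options from step 5 are always passed. This is a sketch only: `submit_sparkpi` is hypothetical, the class, principal and keytab are copied from the command above, the JDK path defaults to /usr/java/jdk1.8.0_25 as in step 5, and the example jar path has to be supplied by you. With the default DRY_RUN=1 the command is printed, not executed.

```shell
# submit_sparkpi JAR [JAVA_HOME]: print (or, with DRY_RUN=0, run) the SparkPi submission.
# Hypothetical helper combining the step 5 --conf options with the step 7 command.
submit_sparkpi() {
  local jar=$1 java_home=${2:-/usr/java/jdk1.8.0_25}
  set -- /discover/bin/spark-submit \
    --class org.apache.spark.examples.JavaSparkPi \
    --principal yarn/tdh1-600@TDH \
    --keytab /discover/conf/yarn.keytab \
    --master yarn --deploy-mode client \
    --conf spark.yarn.appMasterEnv.JAVA_HOME="$java_home" \
    --conf spark.executorEnv.JAVA_HOME="$java_home" \
    "$jar"
  echo "$*"
  if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; fi
}
```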

Check the job in YARN:
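To find the job from the command line instead of the YARN web UI, capture the spark-submit output (for example with `2>&1 | tee submit.log`) and extract the application ID, which Spark's YARN client prints in its "Submitted application ..." log line. A sketch with a hypothetical helper `app_id_from_log`:

```shell
# app_id_from_log [FILE]: print the last YARN application ID found in FILE (or stdin).
# Hypothetical helper.
app_id_from_log() {
  grep -o 'application_[0-9][0-9]*_[0-9][0-9]*' "${1:--}" | tail -n 1
}
```

With the ID, `yarn application -status "$(app_id_from_log submit.log)"` shows the final state, and `yarn logs -applicationId` followed by the ID fetches the container logs when log aggregation is enabled, which is where errors such as problem 1 below show up.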

Common errors

Problem 1: the YARN log reports /var/log/discover/spark.log (No such file or directory)

    log4j:ERROR setFile(null,true) call failed.
    java.io.FileNotFoundException: /var/log/discover/spark.log (No such file or directory)
        at java.io.FileOutputStream.open(Native Method)
        at java.io.FileOutputStream.<init>(FileOutputStream.java:213)
        at java.io.FileOutputStream.<init>(FileOutputStream.java:133)
        at org.apache.log4j.FileAppender.setFile(FileAppender.java:294)
        at org.apache.log4j.RollingFileAppender.setFile(RollingFileAppender.java:207)
        at org.apache.log4j.FileAppender.activateOptions(FileAppender.java:165)
        at org.apache.log4j.config.PropertySetter.activate(PropertySetter.java:307)
        at org.apache.log4j.config.PropertySetter.setProperties(PropertySetter.java:172)
        at org.apache.log4j.config.PropertySetter.setProperties(PropertySetter.java:104)
        at org.apache.log4j.PropertyConfigurator.parseAppender(PropertyConfigurator.java:842)
        at org.apache.log4j.PropertyConfigurator.parseCategory(PropertyConfigurator.java:768)
        at org.apache.log4j.PropertyConfigurator.configureRootCategory(PropertyConfigurator.java:648)
        at org.apache.log4j.PropertyConfigurator.doConfigure(PropertyConfigurator.java:514)
        at org.apache.log4j.PropertyConfigurator.doConfigure(PropertyConfigurator.java:580)
        at org.apache.log4j.helpers.OptionConverter.selectAndConfigure(OptionConverter.java:526)
        at org.apache.log4j.LogManager.<clinit>(LogManager.java:127)
        at org.apache.spark.internal.Logging$class.initializeLogging(Logging.scala:117)
        at org.apache.spark.internal.Logging$class.initializeLogIfNecessary(Logging.scala:102)
        at org.apache.spark.deploy.yarn.ApplicationMaster$.initializeLogIfNecessary(ApplicationMaster.scala:737)
        at org.apache.spark.internal.Logging$class.log(Logging.scala:46)
        at org.apache.spark.deploy.yarn.ApplicationMaster$.log(ApplicationMaster.scala:737)
        at org.apache.spark.deploy.yarn.ApplicationMaster$.main(ApplicationMaster.scala:752)
        at org.apache.spark.deploy.yarn.ExecutorLauncher$.main(ApplicationMaster.scala:786)
        at org.apache.spark.deploy.yarn.ExecutorLauncher.main(ApplicationMaster.scala)
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/vdir/mnt/disk1/hadoop/yarn/local/usercache/yarn/filecache/13/__spark_libs__3613082673827864887.zip/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/lib/hadoop/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

Solution: Spark's log4j configuration file is not actually needed here; move /discover/conf/log4j.properties out of the conf directory.

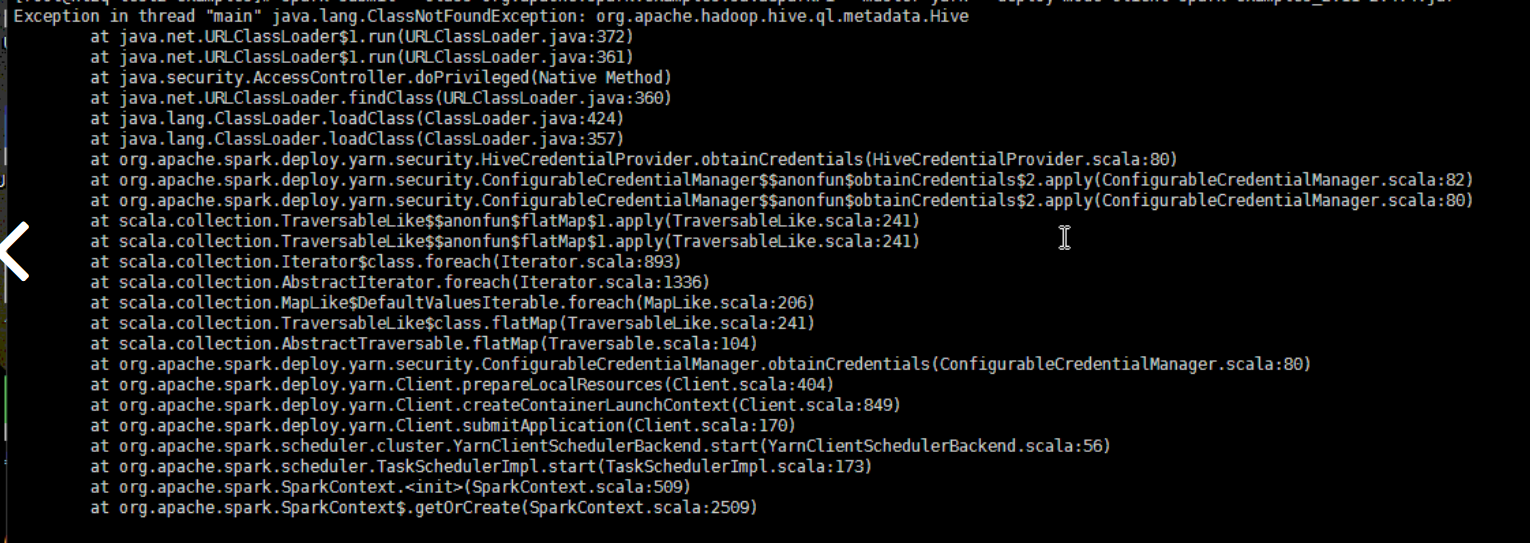
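The trace shows the ApplicationMaster's log4j RollingFileAppender trying to open /var/log/discover/spark.log, a path that does not exist where the ApplicationMaster runs. The fix of moving log4j.properties out of the conf directory can be scripted as below; `move_out_log4j` and the backup directory name are hypothetical.

```shell
# move_out_log4j CONF_DIR BACKUP_DIR: move CONF_DIR/log4j.properties into BACKUP_DIR.
# Hypothetical helper; does nothing if the file is already gone.
move_out_log4j() {
  local conf_dir=$1 backup_dir=$2
  if [ -f "$conf_dir/log4j.properties" ]; then
    mkdir -p "$backup_dir" && mv "$conf_dir/log4j.properties" "$backup_dir/" || return 1
    echo "moved to $backup_dir/log4j.properties"
  else
    echo "no log4j.properties in $conf_dir"
  fi
}
```

Usage: `move_out_log4j /discover/conf /discover/conf.bak`, then resubmit the job.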

Problem 2: spark-submit fails with java.lang.ClassNotFoundException: org.apache.hadoop.hive.ql.metadata.Hive

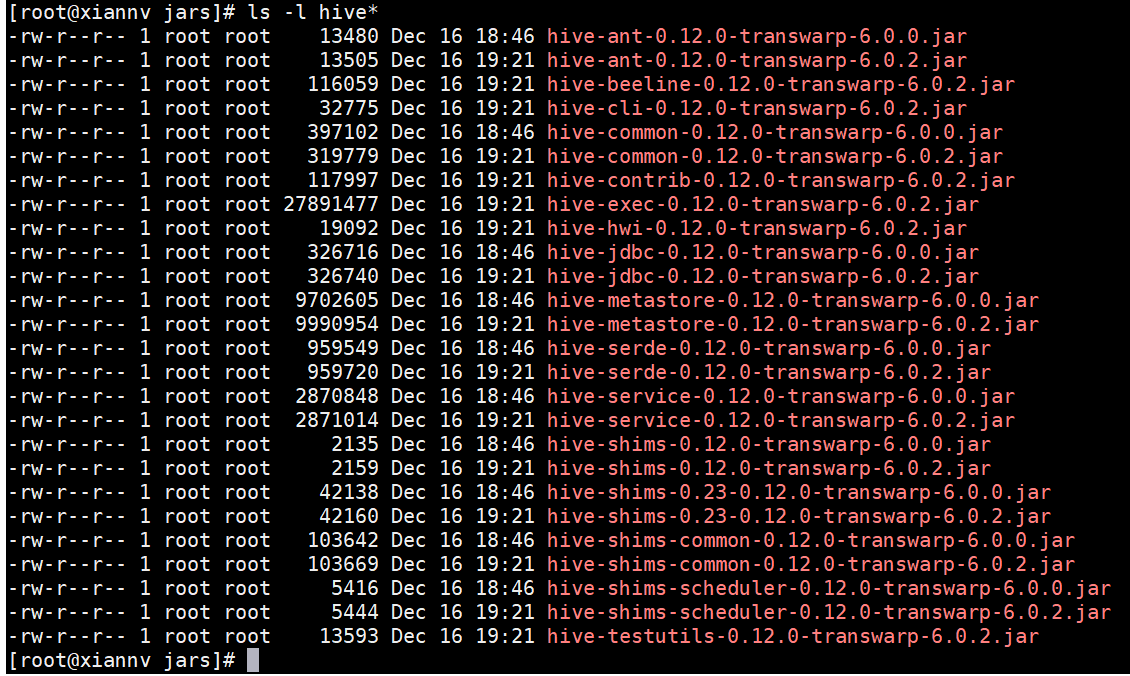

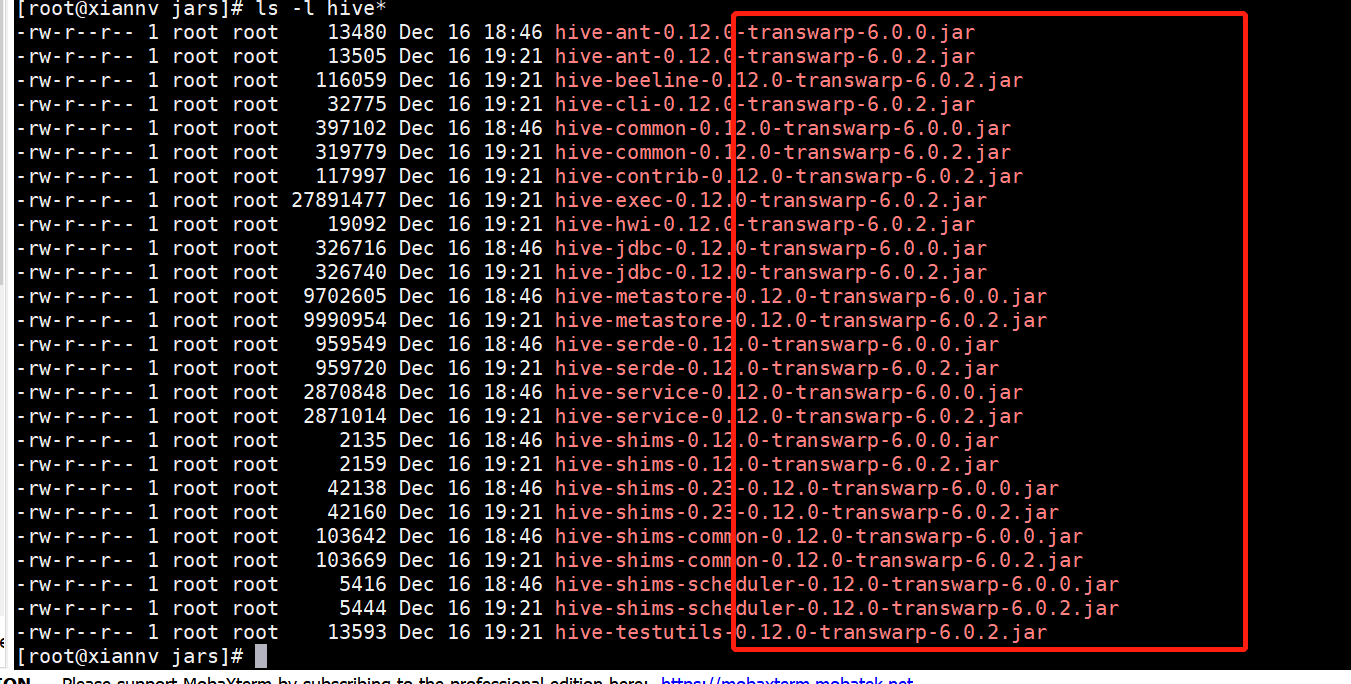

Check whether the /discover/jars directory contains the Hive jars.

1) If it does not, copy the corresponding jars from TDH-Client/inceptor/lib.

2) If the jars are present, check whether the version numbers at the end of the jar file names match the cluster version.

ClassNotFoundException: org.apache.hadoop.hive.ql.metadata.Hive is usually caused by jars that do not match the cluster version. In that case, move the existing Hive jars aside:

mkdir /tmp/hive_jars

cd /discover/jars

mv hive* /tmp/hive_jars

Then copy the corresponding Hive jars from TDH-Client/inceptor/lib.

3) Copying the jars from TDH-Client/inceptor/lib wholesale (instead of only the matching Hive jars) causes jar conflicts and errors such as:

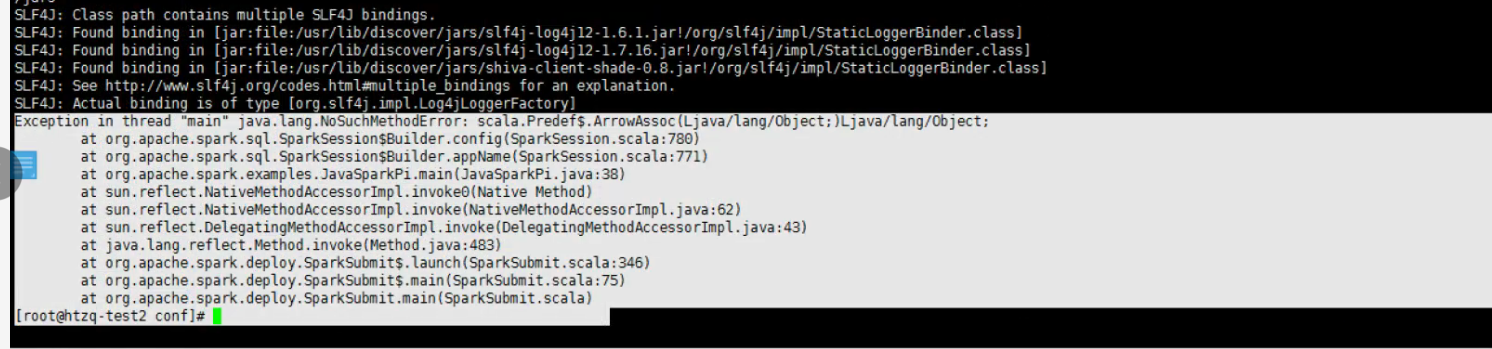

Exception in thread "main" java.lang.NoSuchMethodError: scala.Predef$.$conf
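Items 1) to 3) above all come down to which jar versions sit in /discover/jars. A NoSuchMethodError on scala.Predef$.$conforms typically appears when classes compiled for one Scala binary version (e.g. 2.11) run against a different scala-library (e.g. 2.10), so two scala-library jars side by side are a strong hint. The helpers below are a hypothetical sketch; the version string to compare against is whatever the matching jars in TDH-Client/inceptor/lib carry.

```shell
# jars_with_prefix DIR PREFIX: jar files in DIR whose name starts with PREFIX, sorted.
jars_with_prefix() {
  local dir=$1 prefix=$2 j
  for j in "$dir/$prefix"*.jar; do
    # an unmatched glob stays literal, so skip anything that does not exist
    [ -e "$j" ] && basename "$j"
  done | sort
}

# mismatched_jars DIR PREFIX VERSION: jars with PREFIX whose name lacks VERSION.
mismatched_jars() {
  jars_with_prefix "$1" "$2" | grep -vF -- "$3"
}

# check_duplicate DIR PREFIX: fail if more than one jar shares PREFIX,
# e.g. two scala-library versions on the same classpath.
check_duplicate() {
  local n
  n=$(jars_with_prefix "$1" "$2" | wc -l)
  if [ "$n" -gt 1 ]; then
    echo "conflict: $n jars match $2*"
    return 1
  fi
}
```

Usage: `ls TDH-Client/inceptor/lib | grep hive` shows the expected version string; `mismatched_jars /discover/jars hive VERSION` then lists the Hive jars to move aside, and `check_duplicate /discover/jars scala-library` flags mixed Scala libraries.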