Overview

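When data is written to ES, the default global template maps text fields as keyword, i.e. not analyzed (not_analyzed), so that they can be used for aggregations and similar operations. The maximum length of a non-analyzed field is measured in UTF-8 encoded bytes: at most 32766 bytes, just under 32KB. In some cases, however, the data to be inserted into an ES table exceeds 32KB, and inserting it directly throws an exception. This document explains how to handle that case.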
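Because the limit counts UTF-8 bytes rather than characters, a value can exceed it with far fewer than 32766 characters. For example, each Chinese character takes 3 bytes. The following is a minimal client-side sketch in Python (not part of TDH) that checks a value before inserting it. The helper names `utf8_len` and `fits_in_keyword` are hypothetical, and the 32766 figure is the one reported in the ES error message.

```python
# Lucene's hard limit on the length of a single indexed term, in UTF-8 bytes
# (the figure in the ES error message: "bytes can be at most 32766").
MAX_TERM_BYTES = 32766


def utf8_len(value: str) -> int:
    """Return the size of value in bytes once encoded as UTF-8."""
    return len(value.encode("utf-8"))


def fits_in_keyword(value: str) -> bool:
    """True if a non-analyzed (keyword) field could hold value as one term."""
    return utf8_len(value) <= MAX_TERM_BYTES


if __name__ == "__main__":
    ascii_text = "a" * 32766      # 1 byte per character: exactly at the limit
    chinese_text = "中" * 11000   # 3 bytes per character: 33000 bytes
    print(utf8_len(ascii_text), fits_in_keyword(ascii_text))      # 32766 True
    print(utf8_len(chinese_text), fits_in_keyword(chinese_text))  # 33000 False
```

Only 11000 Chinese characters already overflow the limit, while 32766 ASCII characters still fit.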

Note: this method applies to TDH 5.2 and all later versions.

Details

Creating an esdrive table the usual way and inserting data larger than 32KB fails

- Create the esdrive table

CREATE TABLE es_big_ana(key1 STRING, content STRING) STORED AS ES WITH SHARD NUMBER 3 REPLICATION 1;

- Insert data larger than 32KB into the esdrive table

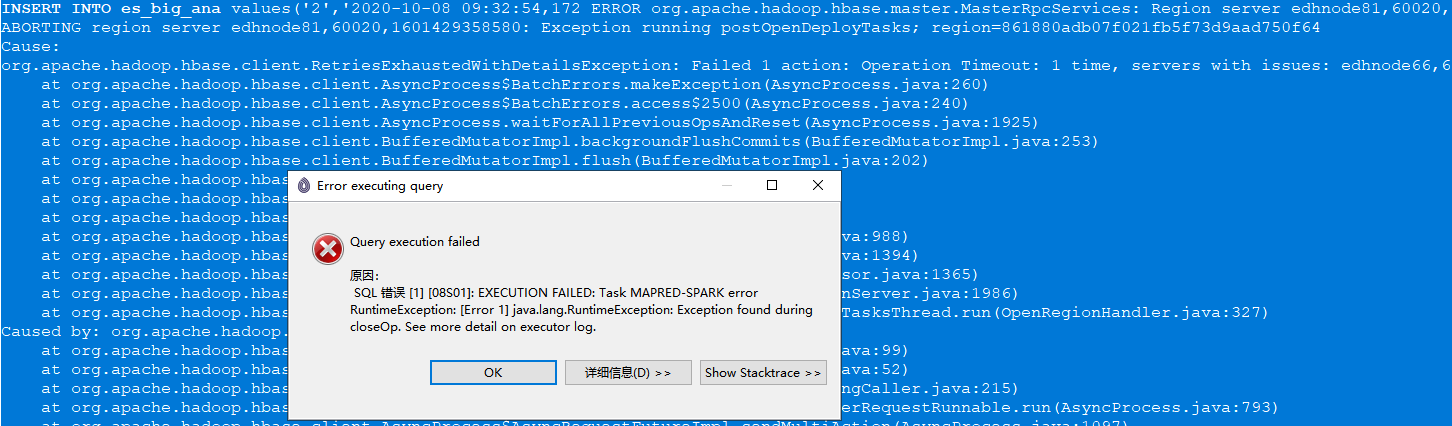

INSERT INTO es_big_ana VALUES('2','2020-10-08 09:32:54,172 ERROR org.apache.hadoop.hbase.master.MasterRpcServices: Region server edhnode81,60020,1601429358580 reported a fatal error: ABORTING region server edhnode81,60020,1601429358580: Exception running postOpenDeployTasks; region=861880adb07f021fb5f73d9aad750f64 Cause: ...... ');

Executing the statement above fails with the following error:

SQL Error [1] [08S01]: EXECUTION FAILED: Task MAPRED-SPARK error RuntimeException: [Error 1] java.lang.RuntimeException: Exception found during closeOp. See more detail on executor log. java.sql.SQLException: EXECUTION FAILED: Task MAPRED-SPARK error RuntimeException: [Error 1] java.lang.RuntimeException: Exception found during closeOp. See more detail on executor log. EXECUTION FAILED: Task MAPRED-SPARK error RuntimeException: [Error 1] java.lang.RuntimeException: Exception found during closeOp. See more detail on executor log.

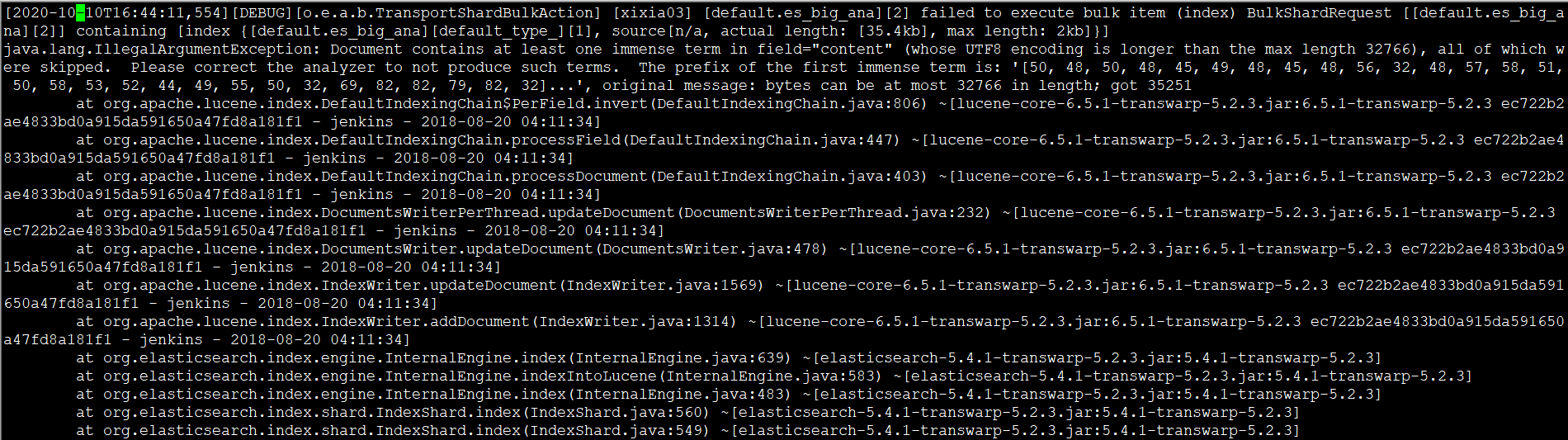

The Search log shows the following error:

[2020-10-10T16:44:11,554][DEBUG][o.e.a.b.TransportShardBulkAction] [xixia03] [default.es_big_ana][2] failed to execute bulk item (index) BulkShardRequest [[default.es_big_ana][2]] containing [index {[default.es_big_ana][default_type_][1], source[n/a, actual length: [35.4kb], max length: 2kb]}] java.lang.IllegalArgumentException: Document contains at least one immense term in field="content" (whose UTF8 encoding is longer than the max length 32766), all of which were skipped. Please correct the analyzer to not produce such terms. The prefix of the first immense term is: '[50, 48, 50, 48, 45, 49, 48, 45, 48, 56, 32, 48, 57, 58, 51, 50, 58, 53, 52, 44, 49, 55, 50, 32, 69, 82, 82, 79, 82, 32]...', original message: bytes can be at most 32766 in length; got 35251
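The list of numbers in the log is the UTF-8 byte prefix of the rejected term. Decoding it, here with a few lines of Python for illustration only, shows that the term starts with the inserted `content` value itself. The whole field value was therefore indexed as one keyword term. The variable names are arbitrary; the numbers are copied from the log.

```python
# Byte prefix copied from the "immense term" message in the Search log.
prefix = [50, 48, 50, 48, 45, 49, 48, 45, 48, 56, 32, 48, 57, 58, 51, 50,
          58, 53, 52, 44, 49, 55, 50, 32, 69, 82, 82, 79, 82, 32]

# Decode the UTF-8 bytes back into text.
decoded = bytes(prefix).decode("utf-8")
print(repr(decoded))  # '2020-10-08 09:32:54,172 ERROR '

# The log also reports the rejected term's size and the limit.
term_bytes, max_bytes = 35251, 32766
print(term_bytes - max_bytes)  # 2485 bytes over the limit
```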

Set an analyzer on the field (WITH ANALYZER) to get past the 32KB limit

- Recreate the table with an analyzer set on the field

CREATE TABLE es_big_ana(key1 STRING, content STRING WITH ANALYZER 'ZH' 'ik') STORED AS ES WITH SHARD NUMBER 3 REPLICATION 1;

- Insert the data

INSERT INTO es_big_ana VALUES('2','2020-10-08 09:32:54,172 ERROR org.apache.hadoop.hbase.master.MasterRpcServices: Region server edhnode81,60020,1601429358580 reported a fatal error: ABORTING region server edhnode81,60020,1601429358580: Exception running postOpenDeployTasks; region=861880adb07f021fb5f73d9aad750f64 Cause: ...... ');

The insert now succeeds, and the length of the field exceeds 32KB:

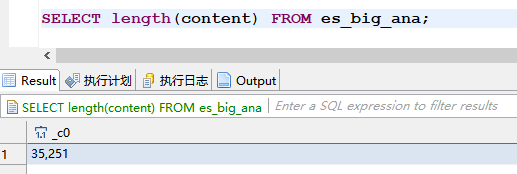

SELECT length(content) FROM es_big_ana;
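Why the analyzer helps: the 32766-byte limit applies to each indexed term, not to the field value as a whole. A keyword field indexes the entire value as one term. An analyzed field splits it into many short terms, each far below the limit. The Python sketch below illustrates this under an explicit assumption: `analyzed_terms` is a crude regex split standing in for an analyzer, not the ik analyzer used in the DDL above. All helper names and the sample value are hypothetical.

```python
import re
from typing import List

# Lucene's per-term limit in UTF-8 bytes, as reported in the Search log.
MAX_TERM_BYTES = 32766


def keyword_terms(value: str) -> List[str]:
    """Non-analyzed (keyword) field: the whole value becomes a single term."""
    return [value]


def analyzed_terms(value: str) -> List[str]:
    """Crude stand-in for an analyzer: split on runs of non-word characters.

    This is NOT the ik analyzer; it only illustrates that analysis turns
    one long value into many short terms.
    """
    return [t for t in re.split(r"\W+", value) if t]


def longest_term_bytes(terms: List[str]) -> int:
    """Size in UTF-8 bytes of the longest term that would be indexed."""
    return max(len(t.encode("utf-8")) for t in terms)


if __name__ == "__main__":
    # Synthetic log-like value larger than 32766 bytes (not the document's data).
    line = "2020-10-08 09:32:54,172 ERROR region server reported a fatal error\n"
    value = line * 550
    print("field size in bytes:", len(value.encode("utf-8")))
    print("keyword fits:", longest_term_bytes(keyword_terms(value)) <= MAX_TERM_BYTES)    # False
    print("analyzed fits:", longest_term_bytes(analyzed_terms(value)) <= MAX_TERM_BYTES)  # True
```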